ZFS logbias property

The ZFS synchronous write bias property, logbias for short, gives ZFS a hint about how to handle synchronous requests for a particular dataset. If logbias is set to "latency" (the default), ZFS uses the pool's separate log devices (if configured) for the ZFS intent log (ZIL), so that requests are handled at low latency. If logbias is set to "throughput", ZFS does not use the pool's separate log devices. Instead, it optimizes synchronous operations for global pool throughput and efficient use of resources.

The logbias property was proposed mainly to help manage the performance of Oracle databases.

Oracle manages two major types of files, the Data Files and the Redo Log files.

Writes to the Redo Log files are on the critical path of every transaction, so low latency is a vital requirement. Giving these write requests high priority is critical for good performance.

Data Files are also written by the DB writers, which flush dirty blocks to ensure DB buffer availability. Write latency matters much less here; what matters more is achieving an acceptable level of throughput. These writes are less critical to delivered performance.

Both types of writes are synchronous (using O_DSYNC), and thus treated equally. They compete for the same resources: separate intent log, memory, and normal pool IO. The Data File writes slow down the overall potential performance of the critical Redo Log writers.

In short: Redo Logs need low latency, so you would use zfs set logbias=latency oradbsrv1-redo/redologs. Oracle data files need throughput, so you would configure zfs set logbias=throughput oradbsrv1-data/datafile.

If logbias is set to 'latency' (the default), ZFS behaves as it did before the property was introduced. If logbias is set to 'throughput', intent log blocks are allocated from the main pool instead of from any separate intent log devices (if present). In addition, data is written immediately to spread the write load, which makes subsequent transaction group commits to the pool quicker. This is the important change that logbias=throughput brings.

For Oracle data files specifically, setting the synchronous write bias to throughput has the potential to deliver more stable performance in general and higher performance for redo-log-sensitive workloads.
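As a rough illustration of the redo/data split described above, here is a minimal sketch that only prints the two zfs commands rather than running them. The helper name logbias_cmd is hypothetical (it is not part of ZFS); the dataset names are the ones used in this post.

```shell
#!/bin/sh
# Sketch only: prints the zfs commands instead of running them.
# logbias_cmd is a hypothetical helper; the dataset names are the ones used above.
logbias_cmd() {
    dataset=$1
    bias=$2
    case "$bias" in
        latency|throughput)
            printf 'zfs set logbias=%s %s\n' "$bias" "$dataset"
            ;;
        *)
            echo "logbias must be latency or throughput" >&2
            return 1
            ;;
    esac
}

logbias_cmd oradbsrv1-redo/redologs latency      # redo logs: low latency
logbias_cmd oradbsrv1-data/datafile throughput   # data files: pool throughput
```

Once the property is set, zfs get logbias followed by the dataset name shows the current value.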

REF:

http://arc.opensolaris.org/caselog/PSARC/2009/423/20090807_neil.perrin

http://www.c0t0d0s0.org/archives/5838-PSARC2009423-ZFS-logbias-property.html

Hello Friends, This is Nilesh Joshi from Pune, India. By profession I am an UNIX Systems Administrator and have proven career track on UNIX Systems Administration. This blog is written from both my research and my experience. The methods I describe herein are those that I have used and that have worked for me. It is highly recommended that you do further research on this subject. If you choose to use this document as a guide, you do so at your own risk. I wish you great success.

Wednesday, December 30, 2009

Monday, December 28, 2009

How to expand Solaris Volume Manager filesystem which is exported to zones from Global Zone

OK, it's been a long time since the last update to the blog... Anyway, today I have some good information on how to expand a Solaris Volume Manager (metadevice) filesystem which is exported to zones from the global zone.

I have a unique system whose configuration is a little different from my other systems: a SPARC Enterprise M4000 running 2 zones. Here is the zone configuration for one of them.

# zonecfg -z zone1 info

zonename: zone1

zonepath: /zone1/zonepath

brand: native

autoboot: true

bootargs:

pool: oracpu_pool

limitpriv: default,dtrace_proc,dtrace_user

scheduling-class:

ip-type: shared

[cpu-shares: 32]

fs:

dir: /oracle

special: /dev/md/dsk/d56

raw: /dev/md/rdsk/d56

type: ufs

options: []

fs:

dir: /oradata1

special: /dev/md/dsk/d59

raw: /dev/md/rdsk/d59

type: ufs

options: []

fs:

dir: /oradata2

special: /dev/md/dsk/d62

raw: /dev/md/rdsk/d62

type: ufs

options: []

fs:

dir: /oradata3

special: /dev/md/dsk/d63

raw: /dev/md/rdsk/d63

type: ufs

options: []

[...]

OK, so here you can see that I have metadevices which are exported to the zone from the global zone. I need to expand one of the filesystems, say /oradata1, by XXG, so how am I going to do this? The procedure below shows how.

global:/

# zonecfg -z zone1 info fs dir=/oradata1

fs:

dir: /oradata1

special: /dev/md/dsk/d59

raw: /dev/md/rdsk/d59

type: ufs

options: []

global:/

# metattach d59 Storage_LUN_ID

global:/

# growfs -M /zone1/zonepath/root/oradata1 /dev/md/rdsk/d59

All of these operations must be performed from the global zone.
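The mount point passed to growfs -M above is the zone's filesystem as seen from the global zone, that is, the zonepath, then /root, then the directory inside the zone. A small sketch of that path construction follows; zone_fs_path is a hypothetical helper, and the script only prints the growfs command, it does not run it.

```shell
#!/bin/sh
# Sketch only: builds and prints the growfs command, it does not run it.
# zone_fs_path is a hypothetical helper; the values come from the zonecfg output above.
zone_fs_path() {
    zonepath=${1%/}   # drop a trailing slash, if any
    dir=$2            # mount point as seen inside the zone
    printf '%s/root%s\n' "$zonepath" "$dir"
}

mnt=$(zone_fs_path /zone1/zonepath /oradata1)
echo "growfs -M $mnt /dev/md/rdsk/d59"
```

The printed command matches the growfs invocation used in the procedure.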

Sunday, December 20, 2009

Removing ^M from unix text files

Using Perl :

The following command changes the original file itself, so keep a backup copy.

perl -pi -e "s:^V^M::g" file_name

You won't see the Control-V as you type, but it is needed to generate the control character ^M.

Using sed :

# sed -e 's/^V^M//g' existing_file_name > new_file_name

Using vi :

Open the file in vi and enter the following at the : prompt in command mode.

:%s/^V^M//g

BTW, there are also the utilities dos2unix and unix2dos, which convert text files between DOS and UNIX formats.
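If typing Control-V Control-M is awkward, tr can delete the same character by its escape sequence. This is an alternative to the commands above, not something from the original tip; strip_cr is a hypothetical helper name. Like the s/^M//g substitutions, it removes every carriage return, not only those at line ends.

```shell
#!/bin/sh
# strip_cr is a hypothetical helper: it deletes every carriage return (^M) from stdin.
strip_cr() {
    tr -d '\r'
}

# Demo on two DOS-style lines; od -c makes any remaining \r visible.
printf 'line one\r\nline two\r\n' | strip_cr | od -c
```

On a real file the call would look like strip_cr < existing_file_name > new_file_name.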

Sunday, December 6, 2009

Small MPxIO tip

This is a relatively small tip, but an equally important one, on MPxIO.

I was always wondering how to tell whether MPxIO is enabled or not, which kernel modules it loads when it is enabled, and how to identify that MPxIO is enabled by looking for its kernel module.

One way to tell whether MPxIO is enabled is -

# stmsboot -L

If MPxIO is not enabled, this command returns a message saying so, which is very straightforward. You can then use the stmsboot -e command to enable MPxIO, which, BTW, is always followed by a reboot.

Another way to know whether MPxIO is enabled is -

# modinfo |grep -i vhci

22 1338650 15698 189 1 scsi_vhci (SCSI VHCI Driver 1.57)

If this module is loaded then it means MPxIO is running.
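The module check can be wrapped in a small test for use in scripts. The sketch below is only an illustration: vhci_loaded is a hypothetical helper, and the demo feeds it the sample modinfo line from this post instead of calling modinfo.

```shell
#!/bin/sh
# vhci_loaded is a hypothetical helper: it succeeds if its input mentions scsi_vhci.
vhci_loaded() {
    grep -i 'scsi_vhci' >/dev/null
}

# Sample line copied from the modinfo output above;
# on a live system use: modinfo | vhci_loaded
sample='22 1338650 15698 189 1 scsi_vhci (SCSI VHCI Driver 1.57)'
if printf '%s\n' "$sample" | vhci_loaded; then
    echo "scsi_vhci is loaded"
else
    echo "scsi_vhci is not loaded"
fi
```

Output is redirected to /dev/null rather than using grep -q, on the assumption that not every grep on the system supports -q.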

Monday, November 30, 2009

Using Live Upgrade utility - migrate Solaris 10 UFS with SVM to ZFS root mirrored configuration.

Introduction:

I have a server running Solaris 10 [Solaris 10 10/09], upgraded from Solaris 9 using Live Upgrade. Currently the root filesystem is on UFS and uses Solaris Volume Manager with a Concat/Stripe mirror configuration.

I'm going to use the Live Upgrade utility to migrate from UFS to ZFS. I have 2 internal disks, and both are currently in use by SVM. So my first task is to free up one disk, on which I can create my rpool and the ZFS boot environment.

My current metadevices are as below -

# metastat -p

d0 -m d20 d10 1

d20 1 1 c1t1d0s0

d10 1 1 c1t0d0s0

d1 -m d11 d21 1

d11 1 1 c1t0d0s1

d21 1 1 c1t1d0s1

d3 -m d23 d13 1

d23 1 1 c1t1d0s3

d13 1 1 c1t0d0s3

d30 -p d4 -o 2097216 -b 2097152

d4 -m d14 d24 1

d14 1 1 c1t0d0s4

d24 1 1 c1t1d0s4

d31 -p d4 -o 32 -b 2097152

My disks are - c1t0d0 & c1t1d0.

So, let us detach the mirrors for all filesystems.

Detach the metadevices from c1t1d0

# metadetach d0 d20

# metadetach d3 d23

# metadetach d1 d21

# metadetach d4 d24

# metastat -p

d0 -m d10 1

d10 1 1 c1t0d0s0

d1 -m d11 1

d11 1 1 c1t0d0s1

d3 -m d13 1

d13 1 1 c1t0d0s3

d24 1 1 c1t1d0s4 >>>>> unused metadevices

d23 1 1 c1t1d0s3 >>>>> unused metadevices

d21 1 1 c1t1d0s1 >>>>> unused metadevices

d20 1 1 c1t1d0s0 >>>>> unused metadevices

d30 -p d4 -o 2097216 -b 2097152

d4 -m d14 1

d14 1 1 c1t0d0s4

d31 -p d4 -o 32 -b 2097152

Now clear the metadevices so that we will have free disk for creating root pool.

# for i in 0 1 3 4; do

> metaclear d2$i

> done

d20: Concat/Stripe is cleared

d21: Concat/Stripe is cleared

d23: Concat/Stripe is cleared

d24: Concat/Stripe is cleared

Now remove metadb from c1t1d0

# metadb -f -d /dev/dsk/c1t1d0s7

All right, we have a free disk now, so let us create the new root pool. Before creating the root pool, make sure you have labeled the disk with an SMI label and reconfigured your slices so that s0 consists of the whole disk.

# zpool create rpool c1t1d0s0

We can now create a new boot environment named Solaris10ZFS that is a copy of the current one on our newly created ZFS pool named rpool.

# lucreate -n Solaris10ZFS -p rpool

Analyzing system configuration.

Comparing source boot environment

system(s) you specified for the new boot environment. Determining which

file systems should be in the new boot environment.

Updating boot environment description database on all BEs.

Updating system configuration files.

Creating configuration for boot environment

Source boot environment is

Creating boot environment

Creating file systems on boot environment

Creating

Populating file systems on boot environment

Checking selection integrity.

Integrity check OK.

Populating contents of mount point .

Copying.

Creating shared file system mount points.

Creating compare databases for boot environment

Creating compare database for file system .

Creating compare database for file system .

Creating compare database for file system .

Updating compare databases on boot environment

Making boot environment

Creating boot_archive for /.alt.tmp.b-P0g.mnt

updating /.alt.tmp.b-P0g.mnt/platform/sun4u/boot_archive

Population of boot environment

Creation of boot environment

# lustatus

Boot Environment Is Active Active Can Copy

Name Complete Now On Reboot Delete Status

-------------------------- -------- ------ --------- ------ ----------

Sol10 yes yes yes no -

Solaris10ZFS yes no no yes -

Now let's activate the boot environment created for ZFS root.

# luactivate Solaris10ZFS

**********************************************************************

The target boot environment has been activated. It will be used when you

reboot. NOTE: You MUST NOT USE the reboot, halt, or uadmin commands. You

MUST USE either the init or the shutdown command when you reboot. If you

do not use either init or shutdown, the system will not boot using the

target BE.

**********************************************************************

In case of a failure while booting to the target BE, the following process

needs to be followed to fallback to the currently working boot environment:

1. Enter the PROM monitor (ok prompt).

2. Change the boot device back to the original boot environment by typing:

setenv boot-device c1t0d0

3. Boot to the original boot environment by typing:

boot

**********************************************************************

Modifying boot archive service

Activation of boot environment

Now lustatus will show below output -

# lustatus

Boot Environment Is Active Active Can Copy

Name Complete Now On Reboot Delete Status

-------------------------- -------- ------ --------- ------ ----------

Sol10 yes yes no no -

Solaris10ZFS yes no yes no -
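Before rebooting, it can be worth confirming from a script which BE will come up next. The sketch below reads lustatus-style output and prints the name whose "Active On Reboot" column is "yes". next_boot_be is a hypothetical helper, and it assumes the column layout shown above with BE names that contain no spaces.

```shell
#!/bin/sh
# next_boot_be is a hypothetical helper: it prints the BE whose
# "Active On Reboot" column (4th field) is "yes".
next_boot_be() {
    awk '$2 == "yes" && $4 == "yes" { print $1 }'
}

# Sample copied from the lustatus output above;
# on a live system use: lustatus | next_boot_be
next_boot_be <<'EOF'
Boot Environment Is Active Active Can Copy
Name Complete Now On Reboot Delete Status
-------------------------- -------- ------ --------- ------ ----------
Sol10 yes yes no no -
Solaris10ZFS yes no yes no -
EOF
```

For the sample above this prints Solaris10ZFS, the BE that was just activated.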

Now let's boot to the ZFS boot environment

# sync;sync;sync;init 6

It's a good idea to have a console connection handy, so that you can see the console messages/errors during the reboot and, in case the server becomes unresponsive, use the console to troubleshoot it.

Nice! The server came up after the reboot and is now on a ZFS root. However, I still have the old BE available on the system, and it's good practice to keep it until the application/database team gives its go-ahead. Once everything is fine, go ahead and delete the old BE if you wish. Deleting the old BE frees up the disk, which you can then use to mirror the existing ZFS root pool.

After reboot you can see lustatus output -

# lustatus

Boot Environment Is Active Active Can Copy

Name Complete Now On Reboot Delete Status

-------------------------- -------- ------ --------- ------ ----------

Sol10 yes no no yes -

Solaris10ZFS yes yes yes no -

Here, you can see Can Delete field is "yes".

Also you can see the ZFS filesystems and zpool details -

# zpool list

NAME SIZE USED AVAIL CAP HEALTH ALTROOT

rpool 33.8G 5.46G 28.3G 16% ONLINE -

# zfs list

NAME USED AVAIL REFER MOUNTPOINT

rpool 9.46G 23.8G 98K /rpool

rpool/ROOT 4.46G 23.8G 21K /rpool/ROOT

rpool/ROOT/Solaris10ZFS 4.46G 23.8G 4.46G /

rpool/dump 1.00G 23.8G 1.00G -

rpool/swap 4.00G 27.8G 16K -

# zpool status

pool: rpool

state: ONLINE

scrub: none requested

config:

NAME STATE READ WRITE CKSUM

rpool ONLINE 0 0 0

c1t1d0s0 ONLINE 0 0 0

errors: No known data errors

OK. I still have a few filesystems like /home and /opt on metadevices, so let's create ZFS filesystems and rsync/dump the UFS filesystems to the ZFS ones.

# zfs create -o mountpoint=/mnt/home -o quota=2g rpool/home

# zfs create -o mountpoint=/mnt/opt -o quota=1g rpool/opt

What I'm doing here is temporarily setting the mount points of rpool/home & rpool/opt to /mnt/home & /mnt/opt, because directories with the same names are already mounted from metadevices. After copying over the data I'm going to change the mount points.

Okay, now it's time to copy over the data. I'm using rsync for this purpose. One can also use the ufsdump utility.

# rsync -axP --delete /home/ /mnt/home/

# rsync -axP /opt/ /mnt/opt/

Cool... now all the data is copied to the ZFS filesystems and we are OK to clear up these metadevices.

OK, so now I will go ahead and delete the old BE safely, as all data has been copied and verified by the application owner.

# ludelete Sol10 && lustatus

Determining the devices to be marked free.

Updating boot environment configuration database.

Updating boot environment description database on all BEs.

Updating all boot environment configuration databases.

Boot environment

Boot Environment Is Active Active Can Copy

Name Complete Now On Reboot Delete Status

-------------------------- -------- ------ --------- ------ ----------

Solaris10ZFS yes yes yes no -

Now we will clear all the metadevices so that the disk hosting the old BE is free to use, and then we can add it to the existing rpool as a mirror disk.

The metadevices stats are as below -

# metastat -p

d0 -m d10 1

d10 1 1 c1t0d0s0

d1 -m d11 1

d11 1 1 c1t0d0s1

d3 -m d13 1

d13 1 1 c1t0d0s3

d30 -p d4 -o 2097216 -b 2097152

d4 -m d14 1

d14 1 1 c1t0d0s4

d31 -p d4 -o 32 -b 2097152

Unmount the metadevices for /home & /opt

# umount /home; umount /opt

Remove or comment out the entries in the vfstab file, then clear the metadevices.

# for i in 0 1 3 4; do metaclear -r d${i}; done

d0: Mirror is cleared

d10: Concat/Stripe is cleared

d1: Mirror is cleared

d11: Concat/Stripe is cleared

d3: Mirror is cleared

d13: Concat/Stripe is cleared

We also have a few soft partitions, so we will clear them up now.

metastat output is as below -

# metastat -p

d30 -p d4 -o 2097216 -b 2097152

d4 -m d14 1

d14 1 1 c1t0d0s4

d31 -p d4 -o 32 -b 2097152

# metaclear d30

d30: Soft Partition is cleared

# metaclear d31

d31: Soft Partition is cleared

# metaclear d14

d14: Concat/Stripe is cleared

# metaclear d4

d4: Mirror is cleared

Remove the metadb

# metadb -d -f /dev/dsk/c1t0d0s7

Verify that you are not left with any SVM metadbs using the metadb -i command; it should return nothing.

All right, now we have got the free disk.

Our next job is to add the free disk to the ZFS rpool as a mirror disk. To do so, we first format the disk c1t0d0 and make slice 0 encompass the whole disk. Write out the label, and get back to the prompt. Now we need to make our single-disk zpool into a two-way mirror.

# zpool status -v

pool: rpool

state: ONLINE

scrub: scrub completed after 0h4m with 0 errors on Mon Nov 30 00:00:43 2009

config:

NAME STATE READ WRITE CKSUM

rpool ONLINE 0 0 0

c1t1d0s0 ONLINE 0 0 0

errors: No known data errors

Let’s attach the 2nd disk to rpool

# zpool attach rpool c1t1d0s0 c1t0d0s0

Please be sure to invoke installboot(1M) to make 'c1t0d0s0' bootable.

This sets up the mirror, and automatically starts the resilvering (syncing) process. You can monitor its progress by running 'zpool status'.

# zpool status

pool: rpool

state: ONLINE

status: One or more devices is currently being resilvered. The pool will

continue to function, possibly in a degraded state.

action: Wait for the resilver to complete.

scrub: resilver in progress for 0h1m, 23.01% done, 0h4m to go

config:

NAME STATE READ WRITE CKSUM

rpool ONLINE 0 0 0

mirror ONLINE 0 0 0

c1t1d0s0 ONLINE 0 0 0

c1t0d0s0 ONLINE 0 0 0 1.30G resilvered

errors: No known data errors

The final step is to actually make c1t0d0 bootable in case c1t1d0 fails.

# installboot -F zfs /usr/platform/`uname -i`/lib/fs/zfs/bootblk /dev/rdsk/c1t0d0s0

Don't forget to change the mount points of /home & /opt, as those are currently mounted under /mnt.

# zfs set mountpoint=/home rpool/home

# zfs set mountpoint=/opt rpool/opt

After all this cleanup & verification work, reboot the system once; this is optional, however!!!

That's it! This is how you can migrate all your servers from UFS to ZFS. For datacenter environments where servers number in the hundreds or thousands, you may think about automating this process.

I'm also exploring how to recover a crashed system using a ZFS root pool & will soon post the procedure on the blog.

Thursday, November 26, 2009

rsync over NFS, issue with permission

We use rsync over NFS to migrate data from one machine to another. We do this by mounting the filesystems of the source machine that need to be migrated on the new one, that is, the target server. On the source machine we have entries in the dfstab file for the filesystems to be shared, and we mount those on the target machine as /mnt/nilesh_rjoshi and so on. We then use rsync to copy them over from the source to the destination server.

# rsync -axP /mnt/nilesh_rjoshi /nilesh_rjoshi

While performing rsync I got errors like -

rsync: send_files failed to open…Permission denied (13)

and at the end of the rsync run -

rsync error: some files could not be transferred (code 23) at main.c(977) [sender=2.6.9]

The problem was that the NFS share options were not set properly. We need to use the NFS option anon.

share -F nfs -o ro=@xx.xx.xx.xx/xx,anon=0 /nilesh_rjoshi/

What does anon=uid do?

If a request comes from an unknown user, use uid as the effective user ID.

Note: Root users (uid 0) are always considered ``unknown'' by the NFS server unless they are included in the root option below.

The default value for this option is 65534. Setting anon to 65535 disables anonymous access. (On Solaris, share_nfs(1M) documents the default as UID_NOBODY and denies access when uid is -1.)

Setting the value to -1 also disables anonymous access. So anon=-1 prevents access by root on clients (unless specified using the root option in /etc/exports, or dfstab in the case of Solaris) and also prevents access by users not defined on the server.

Thanks to my UNIX guru Alex for the solution!

Tuesday, November 24, 2009

Hook up the second drive to SVM to have 2nd submirror to existing SVM configuration.

I have SVM filesystems/partitions with one-way mirrors (a single submirror each), and I need to add a 2nd disk to the existing SVM configuration to make them two-way mirrors. The procedure below shows how.

This can be scripted; however, to be honest, I'm really bad at scripting... one of the important areas I'm lacking in!!!

Let's start - below are the available internal disks where all OS data is hosted.

Available disks -

AVAILABLE DISK SELECTIONS:

0. c1t0d0

/pci@8,600000/SUNW,qlc@4/fp@0,0/ssd@w2100000c507bd610,0

1. c1t1d0

/pci@8,600000/SUNW,qlc@4/fp@0,0/ssd@w21000004cf9985c7,0

Current metastat -p output -

# metastat -p

d0 -m d10 1

d10 1 1 c1t0d0s0

d3 -m d13 1

d13 1 1 c1t0d0s3

d1 -m d11 1

d11 1 1 c1t0d0s1

d30 -p d4 -o 2097216 -b 2097152

d4 -m d14 1

d14 1 1 c1t0d0s4

d31 -p d4 -o 32 -b 2097152

Current metadbs -

# metadb -i

flags first blk block count

a m p luo 16 8192 /dev/dsk/c1t0d0s7

a p luo 8208 8192 /dev/dsk/c1t0d0s7

a p luo 16400 8192 /dev/dsk/c1t0d0s7

Time to get our hands dirty! Let's add the 2nd disk as a mirror to SVM.

We start by slicing the second drive in the same way as our first drive, the master.

# prtvtoc /dev/rdsk/c1t0d0s2 | fmthard -s - /dev/rdsk/c1t1d0s2

fmthard: New volume table of contents now in place.

No need to newfs the second drive's slices here; that will be done automatically by the mirror syncing later.

We can now set up our metadbs on the 2nd disk that is being added.

# metadb -a -f -c3 c1t1d0s7

Now the metadbs look as follows -

# metadb -i

flags first blk block count

a m p luo 16 8192 /dev/dsk/c1t0d0s7

a p luo 8208 8192 /dev/dsk/c1t0d0s7

a p luo 16400 8192 /dev/dsk/c1t0d0s7

a u 16 8192 /dev/dsk/c1t1d0s7

a u 8208 8192 /dev/dsk/c1t1d0s7

a u 16400 8192 /dev/dsk/c1t1d0s7

PS: I'm not sure why the metadbs for the 2nd disk have flags like this; somehow the replica's location is not patched in the kernel. Later update!!! Hmm.... After the sync & a reboot, the metadbs for the 2nd disk have the same flags as those on the 1st disk...

Now we set up the initial metadevices.

# metainit -f d20 1 1 c1t1d0s0

# metainit -f d21 1 1 c1t1d0s1

# metainit -f d23 1 1 c1t1d0s3

# metainit -f d24 1 1 c1t1d0s4

# metastat -p

d0 -m d10 1

d10 1 1 c1t0d0s0

d3 -m d13 1

d13 1 1 c1t0d0s3

d1 -m d11 1

d11 1 1 c1t0d0s1

d24 1 1 c1t1d0s4

d23 1 1 c1t1d0s3

d21 1 1 c1t1d0s1

d20 1 1 c1t1d0s0

d30 -p d4 -o 2097216 -b 2097152

d4 -m d14 1

d14 1 1 c1t0d0s4

d31 -p d4 -o 32 -b 2097152

Now it's time to hook up the second drive so that we actually have mirrored slices.

# metattach d0 d20

# metattach d1 d21

# metattach d3 d23

# metattach d4 d24

Now see the metastat -p output -

# metastat -p

d0 -m d10 d20 1

d10 1 1 c1t0d0s0

d20 1 1 c1t1d0s0

d3 -m d13 d23 1

d13 1 1 c1t0d0s3

d23 1 1 c1t1d0s3

d1 -m d11 d21 1

d11 1 1 c1t0d0s1

d21 1 1 c1t1d0s1

d30 -p d4 -o 2097216 -b 2097152

d4 -m d14 d24 1

d14 1 1 c1t0d0s4

d24 1 1 c1t1d0s4

d31 -p d4 -o 32 -b 2097152

The resync will take a considerable amount of time. Use metastat to check on the progress of the syncing.

All done!
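To double-check that no mirror was missed, the metastat -p output can be scanned for mirrors that still have only one submirror. The sketch below is an illustration only: one_way_mirrors is a hypothetical helper, and it assumes mirror lines have exactly the form shown in this post (name, -m, submirrors, then a single trailing number).

```shell
#!/bin/sh
# one_way_mirrors is a hypothetical helper: in "metastat -p" output a mirror line
# looks like "d0 -m d10 1" (one submirror) or "d0 -m d10 d20 1" (two submirrors).
one_way_mirrors() {
    awk '$2 == "-m" && NF == 4 { print $1 }'
}

# Sample copied from the "before" output above;
# on a live system use: metastat -p | one_way_mirrors
one_way_mirrors <<'EOF'
d0 -m d10 1
d10 1 1 c1t0d0s0
d3 -m d13 1
d13 1 1 c1t0d0s3
d1 -m d11 1
d11 1 1 c1t0d0s1
d30 -p d4 -o 2097216 -b 2097152
d4 -m d14 1
d14 1 1 c1t0d0s4
d31 -p d4 -o 32 -b 2097152
EOF
```

Run against the output taken after the metattach commands, it should print nothing, because every mirror line then lists two submirrors.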

Thursday, November 5, 2009

VERITAS Volume Manager frequently used commands

Display disk listings

# vxdisk list

Display volume manager object listings

# vxprint -ht

Display free space in a disk group

# vxdg -g <diskgroup> free

List all volume manager tasks currently running on the system

# vxtask list

Add a disk to Volume Manager (devicename = cXtXdX) (prompt driven)

# vxdiskadd <devicename>

Take a disk offline (first remove the disk from its disk group) (devicename=cXtXdXs2)

# vxdisk offline <devicename>

Display multipath information

# vxdisk list <devicename>

Display disk group information

# vxdg list

# vxdg list <diskgroup>

REF: http://www.camelrichard.org/topics/VeritasVM/Volume_Manager_CLI_Examples

Wednesday, November 4, 2009

df: cannot statvfs /mount_point: I/O error

We were doing a storage migration for Sun servers today, and after the migration I got the error below on one of our Solaris boxes, which was running VERITAS SFS.

df: cannot statvfs /mount_point: I/O error

You can see what's wrong using the command below -

# vxprint -g datadg

If you run df -kh you will see - df: cannot statvfs /mount_point: I/O error

To recover the volume from this, follow the steps below:

1. Run the following commands in order.

a) umount the VxVM filesystem

b) vxvol -g datadg stopall

c) vxdg deport datadg

d) vxdg import datadg

e) vxvol -g datadg startall

f) vxvol -g dg_name start vol (state a volume name)

vxvol -g dg_name start vol-L01 (state a sub volume name)

g) vxrecover -g datadg -s

h) vxdg list

Just to be sure that things will work, mount the FS, unmount it again, run fsck, and remount it.

2. Unmount the volume.

3. Run fsck against the file system on the volume.

4. Mount the volume.

It should be OK...

NOTE: THIS METHOD ALSO APPLIES TO MOVING A DISK GROUP; SOME OF THE STEPS ABOVE ARE THE ONES USED TO MOVE A DISK GROUP.

My guess is that when the storage fibre cables were swapped, the system, or rather VERITAS Volume Manager, internally executed the equivalent of "vxvol -g datadg stopall". This command stops only unmounted volumes; mounted volumes are not stopped.

Wednesday, October 28, 2009

Replacing/Relabeling the Root Pool Disk

Unfortunately, one of the disks in the root pool went crazy, and the fun lasted for a day!!! Let's fix it. BTW, these days I am testing a small part of rpool recovery after a system crash... This experience comes from that rpool recovery and testing project.

You will see the error shown below if something is wrong with the root pool disk -

# zpool status -x

pool: rpool

state: DEGRADED

status: One or more devices could not be opened. Sufficient replicas exist for

the pool to continue functioning in a degraded state.

action: Attach the missing device and online it using 'zpool online'.

see: http://www.sun.com/msg/ZFS-8000-2Q

scrub: none requested

config:

NAME STATE READ WRITE CKSUM

rpool DEGRADED 0 0 0

mirror DEGRADED 0 0 0

c0t0d0s0 UNAVAIL 0 0 0 cannot open

c0t2d0s0 ONLINE 0 0 0

errors: No known data errors
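
On a box with several pools it helps to pull out only the devices that are not ONLINE. Here is a minimal sketch, assuming the column layout shown in the output above; zpool_unhealthy is a hypothetical helper name, and it only reads text from standard input, so it can be tried on a saved copy of the output.

```shell
# Print "name state" for every row of the config table in `zpool status`
# output (read from stdin) whose STATE column is not ONLINE.
zpool_unhealthy() {
    awk '
        # the NAME/STATE header marks the start of the config table
        $1 == "NAME" && $2 == "STATE" { in_config = 1; next }
        # the "errors:" line marks the end of the table
        $1 == "errors:" { in_config = 0 }
        in_config && NF >= 2 && $2 != "ONLINE" { print $1, $2 }
    '
}
```

Typical use would be: zpool status rpool | zpool_unhealthy. For the output above it reports rpool, mirror and c0t0d0s0, the last one being the disk to replace.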

Get the bad disk replaced with a good one or a brand new disk. First take it offline and unconfigure it:

# zpool offline rpool c0t0d0s0

# cfgadm -c unconfigure c1::dsk/c0t0d0

Physically replace the primary disk. In my case it is c0t0d0 (slice c0t0d0s0 in the pool).

Now label (or relabel) the disk. Always label an rpool disk with the SMI label format and not EFI.

# format -e

Searching for disks...done

AVAILABLE DISK SELECTIONS:

0. c0t0d0

/pci@1f,0/ide@d/dad@0,0

1. c0t2d0

/pci@1f,0/ide@d/dad@2,0

Specify disk (enter its number): 0

selecting c0t0d0

[disk formatted, no defect list found]

FORMAT MENU:

disk - select a disk

type - select (define) a disk type

partition - select (define) a partition table

current - describe the current disk

format - format and analyze the disk

repair - repair a defective sector

show - translate a disk address

label - write label to the disk

analyze - surface analysis

defect - defect list management

backup - search for backup labels

verify - read and display labels

save - save new disk/partition definitions

volname - set 8-character volume name

!<cmd> - execute <cmd>, then return

quit

format> l

[0] SMI Label

[1] EFI Label

Specify Label type[0]: 0

Ready to label disk, continue? yes

format>

Reconfigure the disk and bring it online, if required.

# cfgadm -c configure c1::dsk/c0t0d0

# zpool online rpool c0t0d0s0

You can run zpool replace before detaching; however, I don't see much need for that step (in my case it failed), so I detach the bad disk from the pool directly.

# zpool detach rpool c0t0d0s0

See the status

# zpool status -v

pool: rpool

state: ONLINE

scrub: none requested

config:

NAME STATE READ WRITE CKSUM

rpool ONLINE 0 0 0

c0t2d0s0 ONLINE 0 0 0

errors: No known data errors

Now the newly added disk is in the system and we need to attach it to the pool.

# zpool attach rpool c0t2d0s0 c0t0d0s0

Please be sure to invoke installboot(1M) to make 'c0t0d0s0' bootable.

Check the resilvering status of the newly attached disk.

# zpool status rpool

pool: rpool

state: ONLINE

status: One or more devices is currently being resilvered. The pool will

continue to function, possibly in a degraded state.

action: Wait for the resilver to complete.

scrub: resilver in progress for 0h2m, 25.39% done, 0h6m to go

config:

NAME STATE READ WRITE CKSUM

rpool ONLINE 0 0 0

mirror ONLINE 0 0 0

c0t2d0s0 ONLINE 0 0 0

c0t0d0s0 ONLINE 0 0 0 1.62G resilvered

errors: No known data errors
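
If you want to script the wait instead of re-running the status command by hand, the percentage can be picked out of the scrub line. This is a rough sketch that assumes the exact "resilver in progress ... % done" wording shown above (other ZFS releases word this line differently); resilver_percent is a made-up helper name.

```shell
# Read `zpool status` output on stdin and print the resilver percentage,
# e.g. 25.39. Prints nothing when no resilver is in progress.
resilver_percent() {
    sed -n 's/.*resilver in progress.* \([0-9][0-9.]*\)% done.*/\1/p'
}
```

Typical use would be: zpool status rpool | resilver_percent. An empty result means the line was not found, which with this output format indicates the resilver has finished (or never started).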

After the disk resilvering is complete, install the boot blocks.

# installboot -F zfs /usr/platform/`uname -i`/lib/fs/zfs/bootblk /dev/rdsk/c0t0d0s0

Confirm that you can boot from the replacement disk.

REF: http://www.solarisinternals.com/

Friday, October 23, 2009

Replace Faulty Disk - Solaris Volume Manager

One of the disks went crazy this morning; let us fix it.

The following illustrates how to replace a faulty disk.

1. Check whether there are any replicas on the faulty disk, and remove them if so:

# metadb

If there are, delete them.

# metadb -d c0t0d0s7

Verify that no replicas are left on the faulty disk:

# metadb | grep c0t0d0
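
The same check can be turned into a count, which is handy in a script. A small sketch, assuming metadb prints one line per replica ending in the /dev/dsk/... slice path; replicas_on_disk is a hypothetical name and the function only reads metadb output from standard input.

```shell
# Count state database replicas that live on the given disk (e.g. c0t0d0).
# Reads `metadb` output on stdin and prints the count; 0 means the disk is clean.
replicas_on_disk() {
    # grep -c exits non-zero when the count is 0, so mask that for callers
    grep -c "/dev/dsk/${1}s[0-9]" || true
}
```

Typical use would be: metadb | replicas_on_disk c0t0d0. Anything other than 0 means there are still replicas to delete with metadb -d.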

2. Run "cfgadm" command to remove the failed disk.

# cfgadm -c unconfigure c1::dsk/c0t0d0

3. Insert and configure the new disk.

# cfgadm -c configure c1::dsk/c0t0d0

Verify that the disk is properly configured:

# cfgadm -al

If necessary, run the following disk commands:

# devfsadm

# format >>>> verifying new disk

4. Copy the partition table from the surviving mirror disk to the new disk with prtvtoc and fmthard:

# prtvtoc /dev/rdsk/c0t1d0s2 | fmthard -s - /dev/rdsk/c0t0d0s2 >>>> Make sure both disks are the same size.
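
One way to check the sizes before copying the label is to compare the sector count of slice 2 (the whole-disk slice) on both disks. This is a sketch that assumes the usual prtvtoc layout, where comment lines start with "*" and the fifth column of a partition row is the sector count; the helper names are made up for illustration.

```shell
# Print the sector count of partition 2 from `prtvtoc` output on stdin.
slice2_sectors() {
    awk '$1 == "2" { print $5 }'
}

# Succeed only when two saved prtvtoc outputs report the same slice 2 size.
# usage: same_slice2_size vtoc_of_good_disk vtoc_of_new_disk
same_slice2_size() {
    [ "$(slice2_sectors < "$1")" = "$(slice2_sectors < "$2")" ]
}
```

For example, save prtvtoc /dev/rdsk/c0t1d0s2 and prtvtoc /dev/rdsk/c0t0d0s2 into two files and run same_slice2_size on them; only go ahead with fmthard when it succeeds.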

5. Recreate replicas on new disk:

# metadb -a -f -c 3 /dev/dsk/c0t0d0s7

6. Run metareplace to enable and resync the new disk.

# metareplace -e d0 c0t0d0s0

If the SVM device ID is not up to date, run "metadevadm", which will update the device ID for the new disk.

# metadevadm -u c0t0d0

NOTE: metadevadm is used to update metadevice information. Use this command when the pathname stored in the metadevice state database no longer correctly addresses the device or when a disk drive has had its device ID changed.

Configuration used in this example:

- all disks are SCSI disks

- disks are mirrored by Solaris Volume Manager

- faulty disk was c0t0d0, and the other sub-mirror disk was c0t1d0

Sunday, October 11, 2009

df -kh - df: a line in /etc/mnttab has too many fields

Running the "df -h" command returns the following error -

df: a line in /etc/mnttab has too many fields

Solution -

This issue can arise from wrongly formatted files such as vfstab or auto_master.

If you want to see whether white space is the problem, do a "view /etc/mnttab" and then enter ":set list". This displays TABs as "^I" symbols; spaces show up as spaces. The catch is that files like /etc/vfstab and /etc/auto_master are white-space delimited anyway, so looking for spaces in them is somewhat fruitless: a space between fields is perfectly acceptable.

Most of the time

Anything found in /etc/mnttab has already been accepted by the kernel as a valid mount. /etc/mnttab is a kernel created and maintained file.

I had a backup copy of the vfstab file; I simply replaced the current file with that original and the issue disappeared.

This is a pretty hot tip!
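
If you have no backup copy to fall back on, counting fields is quicker than eyeballing white space. A small sketch, relying on the fact that every vfstab entry has exactly seven white-space separated fields; bad_vfstab_lines is a name I made up, and it only reads the file it is given.

```shell
# Report non-comment, non-empty lines of a vfstab-style file that do not
# have exactly 7 fields. usage: bad_vfstab_lines /etc/vfstab
bad_vfstab_lines() {
    awk '$1 !~ /^#/ && NF > 0 && NF != 7 { print "line " NR ": " NF " fields" }' "$1"
}
```

No output means every entry has the expected number of fields; any line it reports is the first place to look for a stray word or a missing "-" placeholder.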

Tuesday, October 6, 2009

AIX Large File support - Problem even if I am on JFS2

Problem -

Had an issue with a large queue file on one of our Production queue managers.

Solution –

Let us change the fsize value to unlimited, i.e. -1 [fsize is the largest file that a user can create, counted in 512-byte blocks.]
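
To see what a given fsize really allows, convert it to bytes. A quick sketch of the arithmetic, assuming fsize is counted in 512-byte blocks as AIX documents for its user limits; fsize_to_bytes is just a name I picked for illustration.

```shell
# Convert an AIX fsize limit (512-byte blocks) to bytes; -1 means no limit.
fsize_to_bytes() {
    if [ "$1" = "-1" ]; then
        echo unlimited
    else
        echo $(( $1 * 512 ))
    fi
}
```

For example, fsize_to_bytes 2097151 gives 1073741312, which is 512 bytes short of 1GB (1073741824). So with the old value even a 1GB file could not be created, whatever the filesystem type.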

# lsuser mqm

mqm id=30031 pgrp=mqm groups=mqm,mqbrkrs home=/var/mqm shell=/usr/bin/ksh gecos=MQM Application login=true su=true rlogin=true daemon=true admin=false sugroups=ALL admgroups= tpath=nosak ttys=ALL expires=0 auth1=SYSTEM auth2=NONE umask=2 registry=files SYSTEM=compat logintimes= loginretries=0 pwdwarntime=0 account_locked=false minage=0 maxage=0 maxexpired=-1 minalpha=0 minother=0 mindiff=0 maxrepeats=8 minlen=0 histexpire=0 histsize=0 pwdchecks= dictionlist= fsize=2097151 cpu=-1 data=-1 stack=65536 core=2097151 rss=65536 nofiles=2000 data_hard=-1 time_last_unsuccessful_login=1241892309 tty_last_unsuccessful_login=ssh host_last_unsuccessful_login=asdemq3.sdde.deere.com unsuccessful_login_count=11 roles=

# chuser fsize='-1' mqm

# echo $?

0

# lsuser mqm

mqm id=30031 pgrp=mqm groups=mqm,mqbrkrs home=/var/mqm shell=/usr/bin/ksh gecos=MQM Application login=true su=true rlogin=true daemon=true admin=false sugroups=ALL admgroups= tpath=nosak ttys=ALL expires=0 auth1=SYSTEM auth2=NONE umask=2 registry=files SYSTEM=compat logintimes= loginretries=0 pwdwarntime=0 account_locked=false minage=0 maxage=0 maxexpired=-1 minalpha=0 minother=0 mindiff=0 maxrepeats=8 minlen=0 histexpire=0 histsize=0 pwdchecks= dictionlist= fsize=-1 cpu=-1 data=-1 stack=65536 core=2097151 rss=65536 nofiles=2000 data_hard=-1 time_last_unsuccessful_login=1241892309 tty_last_unsuccessful_login=ssh host_last_unsuccessful_login=asdemq3.sdde.deere.com unsuccessful_login_count=11 roles=

Now log in as the mqm user

$ id

uid=30031(mqm) gid=30109(mqm) groups=32989(mqbrkrs)

Now we will verify that we can create big files, say of size 3G.

So, let's create a 1G file to start with.

$ /usr/sbin/lmktemp test 1073741824 >>>> lmktemp is a command that creates a temp file of a given size; you can also use the dd command. The small Perl script below is another option (as written it writes a 2GB file named mybigfile), though it is optional as we have plenty of choices...

#!/usr/bin/perl -w

my $buf = ' ' x (1024 * 1024);

my $i;

open(F,'>mybigfile') || die "can't open file\n$!\n";

for($i=0;$i<2048;$i++){

print F $buf;

}

close(F);

Create a second 1GB file:

$ cp test test01

To create a 2GB file, append the first file to the second file:

$ cat test >> test01

To create a 3GB file, append the first file to the second file again:

$ cat test >> test01

$ ls -l

total 8388608

drwxrwx--- 3 mqm mqm 256 Dec 16 2007 MQGWP2

drwxr-xr-x 2 mqm mqm 256 Jun 20 2007 lost+found

-rw-rw-r-- 1 mqm mqm 1073741824 Oct 06 09:23 test

-rw-rw-r-- 1 mqm mqm 3221225472 Oct 06 09:24 test01

Cool, now I can create big files after changing the fsize value to -1.

Upgrade Veritas Storage foundation 4.1 to 5.0 on Sun Solaris

All right, after a long time I am finally back on the blog. I have been a little busy with shifting house and setting it up, along with managing office work... It was a really busy week... Today my task is a Veritas Volume Manager/Veritas Storage Foundation upgrade, so I thought of putting this information on the blog.

Let me admit before I start: I am not an expert on this product and have very little experience with it.

I am upgrading Veritas Storage Foundation 4.1 to 5.0 on Sun Solaris 5.9; the steps below are a guide on how to do it.

1. Save your existing configuration in some safe location. This document does not consider rootdg, as we don't use rootdg at all. What we will concentrate on is datadg.

Let's see what the current Veritas Volume Manager version is -

# pkginfo -l VRTSvxvm

PKGINST: VRTSvxvm

NAME: VERITAS Volume Manager, Binaries

CATEGORY: system

ARCH: sparc

VERSION: 4.0,REV=12.06.2003.01.35

BASEDIR: /

VENDOR: VERITAS Software

DESC: Virtual Disk Subsystem

PSTAMP: VERITAS-4.0_MP2_RP7.2:2007-02-22

[.....]

or you can use command -

# pkginfo -l VRTSvxvm |grep VERSION |awk '{print $2}' |awk -F, '{print $1}'
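
The grep and the two awk calls can also be folded into a single awk, which is easier to reuse in a pre-upgrade check. A minimal sketch, assuming the "VERSION: x.y,REV=..." line format shown above; pkg_version is a hypothetical helper that reads pkginfo -l output from standard input.

```shell
# Print the version (the part before the first comma) from `pkginfo -l` output.
pkg_version() {
    awk '$1 == "VERSION:" { split($2, parts, ","); print parts[1]; exit }'
}
```

Typical use would be: pkginfo -l VRTSvxvm | pkg_version, which for the output above prints 4.0.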

Before the upgrade, make sure you save your current configuration: the license files, the vfstab file, the output of df -kh and mount, and the output of a few VxVM commands such as vxprint, vxdisk list and vxdg list. This information will help with the backout process if the upgrade fails.

# cd /etc/vx/licenses/; tar -cvf /home/nilesh_rjoshi/vx_licenses_101109.tar ./* >>>> Take backup of VxVM licenses

# cp -p /etc/vfstab /home/nilesh_rjoshi/ >>>> Backup vfstab file.

# df -kh > /home/nilesh_rjoshi/df_kh_op_101109 >>>> df -kh output

# mount > /home/nilesh_rjoshi/mount_op_101109 >>>> mount command output

# vxprint -ht > /home/nilesh_rjoshi/vxprint_ht_op_101109 >>>> Save vxprint -ht output. vxprint utility displays complete or partial information from records in VERITAS Volume Manager (VxVM) disk group configurations.

# vxprint -g datadg -mvphsrqQ > /home/nilesh_rjoshi/vxprint-g_datadg_mvphsrqQ_op_101109 >>>> In detail O/P of vxprint command

# vxdisk list > /home/nilesh_rjoshi/vxdisk_list_op_101109 >>>> Save the O/P of vxdisk list; it shows all disks associated with or used by VERITAS Volume Manager.

# vxdg list > /home/nilesh_rjoshi/vxdg_list_101109 >>>> Back up the disk group list
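
If you do this on more than one server, the redirections above can be wrapped in a tiny helper so every capture lands in one directory with a date suffix. This is only a sketch: save_output is a name I made up, and the mmddyy suffix is my assumption about the naming used above.

```shell
# Run a command and save its output (stdout and stderr) under DIR as NAME_<mmddyy>.
# Prints the path of the file it wrote.
# usage: save_output DIR NAME COMMAND [ARGS...]
save_output() {
    dir=$1
    name=$2
    shift 2
    mkdir -p "$dir" || return 1
    out="$dir/${name}_$(date +%m%d%y)"
    # keep going even if the command fails, but say so on stderr
    "$@" > "$out" 2>&1 || echo "warning: '$*' exited non-zero" >&2
    echo "$out"
}
```

For example, save_output /home/nilesh_rjoshi vxdisk_list_op vxdisk list would capture the same information as the vxdisk command above, and the same call works for vxprint -ht, vxdg list, df -kh and mount.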

Nice one, I guess we now have enough information backed up to use for a backout if something goes wrong.

Let us start with the upgrade process.

Before proceeding, ask the application users to stop their applications, or, if you have the authority and know how to stop them, disable the applications and unmount the filesystems yourself.

The three steps below need to be carried out -

- umount vxfs filesystems

- comment them out of the vfstab

- disable application startup in /etc/rc2.d and /etc/rc3.d

# df -kh

/dev/md/dsk/d0 3.0G 2.4G 481M 84% /

/proc 0K 0K 0K 0% /proc

mnttab 0K 0K 0K 0% /etc/mnttab

fd 0K 0K 0K 0% /dev/fd

/dev/md/dsk/d3 2.0G 1.6G 334M 83% /var

swap 11G 160K 11G 1% /var/run

dmpfs 11G 0K 11G 0% /dev/vx/dmp

dmpfs 11G 0K 11G 0% /dev/vx/rdmp

/dev/md/dsk/d6 992M 8.2M 925M 1% /home

/dev/md/dsk/d5 2.0G 362M 1.5G 19% /tmp

/dev/vx/dsk/datadg/app1_vol

5.0G 2.6G 2.3G 54% /app1

/dev/vx/dsk/datadg/app_db_vol

1.0G 452M 536M 46% /app_db

/dev/vx/dsk/datadg/appdata_vol

40G 10G 28G 28% /app_data

[.....]

# umount /app1; umount /app_db; umount /app_data

Verify with df -kh that everything is fine.

# vi /etc/vfstab >>>> Comment out veritas filesystems in vfstab file.
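
If there are many Veritas entries, the commenting can be done with sed instead of by hand in vi. A small sketch, assuming all the entries to disable start with /dev/vx/ in the first column; comment_vx_entries is a hypothetical helper that writes the edited copy to standard output and never touches the original file.

```shell
# Print a copy of the given vfstab with every line that starts with /dev/vx/
# commented out. usage: comment_vx_entries /etc/vfstab > /tmp/vfstab.new
comment_vx_entries() {
    sed 's|^/dev/vx/|#&|' "$1"
}
```

Compare the result against the original with diff before copying it into place; to re-enable the entries after the upgrade, go back to the vfstab copy saved earlier.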

Now disable application startup in /etc/rc2.d and /etc/rc3.d

# mv S90app_tomcat K90app_tomcat

Make sure you have commented out the vfstab entries for all Veritas filesystems, that application auto-startup is stopped through the rc directories, and that all Veritas filesystems are unmounted. The next task is to export (deport) the disk group.

# vxvol -g datadg stopall >>>> The vxvol utility performs VERITAS Volume Manager (VxVM) operations on volumes. It is a maintenance command for VERITAS.

# vxdg deport datadg >>>> Disables access to the specified disk group. A disk group cannot be deported if any volumes in the disk group are currently open. When a disk group is deported, the host ID stored on all disks in the disk group is cleared (unless a new host ID is specified with -h), so the disk group is not reimported automatically when the system is rebooted.

# vxdg -t import datadg >>>> Part of disassociating the dg from the host. Here -t requests a temporary import, which is not repeated automatically at reboot. The import option makes the specified disk group available on the local machine. When a disk group is imported, all disks in the disk group are stamped with the host's host ID.

# vxdg deport datadg

Fine, now we are done with all applicable operations on disk group.

Start the installer/upgrader:

Mount the CD/ISO file on the server and start the installation.

Quick note on mounting ISO file on Solaris -

# lofiadm -a /nilesh_rjoshi/VERITAS_Storage_Foundation_5.iso

/dev/lofi/1

# mount -F hsfs /dev/lofi/1 /mnt

Navigate to location

# cd /mnt/Solaris/Veritas/v5.0/sf_50_base

NOTE: BE SURE YOU'RE IN RUNLEVEL 3, THIS WILL NOT WORK IN RUNLEVEL 1

# ./installer

Storage Foundation and High Availability Solutions 5.0

Symantec Product Version Installed Licensed

===========================================================================

Veritas Cluster Server no no

Veritas File System 4.0 yes

Veritas Volume Manager 4.0 yes

Veritas Volume Replicator no no

Veritas Storage Foundation 4.0 yes

Veritas Storage Foundation for Oracle no no

Veritas Storage Foundation for DB2 no no

Veritas Storage Foundation for Sybase no no

Veritas Storage Foundation Cluster File System no no

Veritas Storage Foundation for Oracle RAC no no

Task Menu:

I) Install/Upgrade a Product C) Configure an Installed Product

L) License a Product P) Perform a Pre-Installation Check

U) Uninstall a Product D) View a Product Description

Q) Quit ?) Help

Enter a Task: [I,C,L,P,U,D,Q,?] I

Storage Foundation and High Availability Solutions 5.0

1) Veritas Cluster Server

2) Veritas File System

3) Veritas Volume Manager

4) Veritas Volume Replicator

5) Veritas Storage Foundation

6) Veritas Storage Foundation for Oracle

7) Veritas Storage Foundation for DB2

8) Veritas Storage Foundation for Sybase

9) Veritas Storage Foundation Cluster File System

10) Veritas Storage Foundation for Oracle RAC

11) Veritas Cluster Management Console

12) Web Server for Storage Foundation Host Management

13) Symantec Product Authentication Service

b) Back to previous menu

Select a product to install: [1-13,b,q] 5

Enter the system names separated by spaces on which to install SF: XXXXXX

Checking system licensing

Installing licensing packages

Permanent SF license registered on sdxapp1

Do you want to enter another license key for sdxapp1? [y,n,q] (n) >>>> press enter

Storage Foundation and High Availability Solutions 5.0

installer will upgrade or install the following SF packages:

VRTSperl Veritas Perl 5.8.8 Redistribution

VRTSvlic Veritas Licensing

VRTSicsco Symantec Common Infrastructure

VRTSpbx Symantec Private Branch Exchange

VRTSsmf Symantec Service Management Framework

VRTSat Symantec Product Authentication Service

VRTSobc33 Veritas Enterprise Administrator Core Service

VRTSob Veritas Enterprise Administrator Service

VRTSobgui Veritas Enterprise Administrator

VRTSccg Veritas Enterprise Administrator Central Control Grid

VRTSmh Veritas Storage Foundation Managed Host by Symantec

VRTSaa Veritas Enterprise Administrator Action Agent

VRTSspt Veritas Software Support Tools

SYMClma Symantec License Inventory Agent

VRTSvxvm Veritas Volume Manager Binaries

VRTSdsa Veritas Datacenter Storage Agent

VRTSfspro Veritas File System Management Services Provider

VRTSvmman Veritas Volume Manager Manual Pages

VRTSvmdoc Veritas Volume Manager Documentation

Press [Return] to continue:

[........]

I got below error -

The following errors prevent SF from being installed successfully on XXXXX:

827877 KB is required in the / volume and only 490085 KB is available on XXXXX

installer log files are saved at /opt/VRTS/install/logs/installer-kPOqLK

Check which directories or files are eating up the disk space -

# find . -name core -xdev -exec ls -l {} \; 2>/dev/null >>>> Find core files

# find . -size +20000 -xdev -exec ls -l {} \; 2>/dev/null >>>> Find files larger than 20000 blocks of 512 bytes (roughly 10 MB)

Do the appropriate clean-up and start installer once again.

So make sure your root filesystem has enough space available.
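
The free space can be checked up front rather than waiting for the installer to complain. A rough sketch, assuming the usual df -k layout where the available kilobytes are the third field from the end of the last line (this also copes with df wrapping long device names onto two lines); avail_kb and has_space are made-up names.

```shell
# Print the "avail" figure (in KB) from `df -k <filesystem>` output on stdin.
avail_kb() {
    awk 'NF >= 3 { a = $(NF-2) } END { print a }'
}

# Succeed when the filesystem described on stdin has at least REQUIRED_KB free.
# usage: df -k / | has_space 827877
has_space() {
    avail=$(avail_kb)
    [ "$avail" -ge "$1" ]
}
```

With the figures from the error above, df -k / | has_space 827877 would have failed, since only 490085 KB were available, which is about 330 MB short.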

CPI WARNING V-9-1-1303 Installing Veritas Storage Foundation will leave only approximately 5107 KB of free space remaining

in the /var volume on XXXXX.

Do you want to continue? [y,n,q] (n) y

installer is now ready to upgrade SF.

All SF processes that are currently running must be stopped.

Are you sure you want to upgrade SF? [y,n,q] (y) y

Checking for any AP driver issues on XXXXXX ..................................................................... None

Storage Foundation and High Availability Solutions 5.0

Storage Foundation and High Availability Solutions 5.0

Stopping SF: 100%

Shutdown completed successfully on all systems

Storage Foundation and High Availability Solutions 5.0

Uninstalling SF: 100%

Uninstall completed successfully on all systems

Storage Foundation and High Availability Solutions 5.0

Installing SF: 100%

Installation completed successfully on all systems

Upgrade log files and summary file are saved at:

/opt/VRTS/install/logs/installer-XffXHU

CPI WARNING V-9-11-2246 You have completed upgrading VxVM on some or all of the systems. Reboot your systems at this time.

Reboot all systems on which VxFS was installed or upgraded.

shutdown -y -i6 -g0

See the Veritas File System Administrators Guide for information on using VxFS.

At least one package will require a reboot prior to configuration. Please reboot and run installer -configure on the

following systems after reboot has completed:

XXXXXX

Execute '/usr/sbin/shutdown -y -i6 -g0' to properly restart your systems.

Now let us install the patch.

# cd /mnt/Solaris/Veritas/v5.0/sf_50_mp1

# ./installmp

Veritas Maintenance Pack 5.0MP1 Installation Program

Copyright (c) 2007 Symantec Corporation. All rights reserved. Symantec, the Symantec Logo are trademarks or registered

trademarks of Symantec Corporation or its affiliates in the U.S. and other countries. Other names may be trademarks of their

respective owners.

The Licensed Software and Documentation are deemed to be "commercial computer software" and "commercial computer software

documentation" as defined in FAR Sections 12.212 and DFARS Section 227.7202.

Logs for installmp are being created in /var/tmp/installmp-tjSj6m.

Enter the system names separated by spaces on which to install MP1: XXXXX

Initial system check:

Veritas Maintenance Pack 5.0MP1 Installation Program

Checking installed packages on XXXXXX

The following patches will be installed on XXXXXX:

123996-06 to patch package VRTSsmf

123678-04 to patch package VRTSat

122632-02 to patch package VRTSobc33

122631-02 to patch package VRTSob

122633-02 to patch package VRTSobgui

123076-02 to patch package VRTSccg

123079-02 to patch package VRTSmh

123075-02 to patch package VRTSaa

[.......]

Press [Return] to continue:

installmp is now ready to install MP1.

Required 5.0 processes that are currently running will be stopped.

Are you sure you want to install MP1? [y,n,q] (y)

The installer will now stop the processes and install MP1. Basically this installs several Sun patches required for the VERITAS packages. It is just a process of fixing bugs!

Add the mp1_rp3 [VRTSvxvm 5.0MP3RP2: Rolling Patch 02 for Volume Manager 5.0MP3]

# cd /mnt/Solaris/Veritas/v5.0/sf_50_mp1_rp3/

# patchadd 123742-04

# patchadd 124361-04

Once all this is done, re-import the disk group

# vxdg import datadg

# vxdg deport datadg

# vxdg import datadg

# vxvol -g datadg startall

Verify with

# vxprint -ht >>>> Verify that the volumes are ENABLED/ACTIVE

Final touch-up

Re-enable the filesystems in /etc/vfstab

Re-enable application startup in /etc/rc2.d/ and /etc/rc3.d

Verify you can mount all filesystems in vfstab with "mount -a"

Reboot the system one final time: init 6 or shutdown -y -i6 -g0

And you are done. This upgrade is pretty straightforward if everything is in good shape: enough disk space on root, var and tmp, a well-patched system, etc...

Hope this is useful information for someone! Have a great weekend.

let me admit before I start - I am not an expert on this product and have very small experience with this product.

I am upgrading Veritas Storage foundation 4.1 to 5.0 on Sun Solaris 5.9, below steps guide on "how to?"

1. Save your existing configuration at some safe location. This document does not consider rootdg as we dont use rootdg at all. What we will concentrate on is datadg.

Lets see what is current Veritas Volume Manager version is -

# pkginfo -l VRTSvxvm

PKGINST: VRTSvxvm

NAME: VERITAS Volume Manager, Binaries

CATEGORY: system

ARCH: sparc

VERSION: 4.0,REV=12.06.2003.01.35

BASEDIR: /

VENDOR: VERITAS Software

DESC: Virtual Disk Subsystem

PSTAMP: VERITAS-4.0_MP2_RP7.2:2007-02-22

[.....]

or you can use command -

# pkginfo -l VRTSvxvm |grep VERSION |awk '{print $2}' |awk -F, '{print $1}'

Before upgrade make sure you save your current configuration like license file, vfstab file, df -kh O/P, mount command's O/P and few VxVM commands output like vxprint, vxdisk list, vxdg list etc... This information will help you in case of upgrade fail and backout process.

# cd /etc/vx/licenses/; tar -cvf /home/nilesh_rjoshi/vx_licenses_101109.tar ./* >>>> Take backup of VxVM licenses

# cp -p /etc/vfstab /home/nilesh_rjoshi/ >>>> Backup vfstab file.

# df -kh > /home/nilesh_rjoshi/df_kh_op_101109 >>>> df -kh output

# mount > /home/nilesh_rjoshi/mount_op_101109 >>>> mount command output

# vxprint -ht > /home/nilesh_rjoshi/vxprint_ht_op_101109 >>>> Save vxprint -ht output. vxprint utility displays complete or partial information from records in VERITAS Volume Manager (VxVM) disk group configurations.

# vxprint -g datadg -mvphsrqQ > /home/nilesh_rjoshi/vxprint-g_datadg_mvphsrqQ_op_101109 >>>> In detail O/P of vxprint command

# vxdisk list > /home/nilesh_rjoshi/vxdisk_list_op_101109 >>>> Save O/P of vxdisk, it shows all disks associated with or used by VERITAS Volume MAnager.

# vxdg list > /home/nilesh_rjoshi/vxdg_list_101109 >>>> Backup the data group list

Nice one, I guess now we have enough information backed up and can be used in case of backout if something goes wrong.

Let us start with the upgrade process.

Before proceeding ask application users to stop their applications or if you have authority and knowledge on how to stop applications then please disable Applications & Unmount filesystems.

Below three steps needs to be carried out -

- umount vxfs filesystems

- comment them out of the vfstab

- disable application startup in /etc/rc2.d and /etc/rc3.d

# df -kh

/dev/md/dsk/d0 3.0G 2.4G 481M 84% /

/proc 0K 0K 0K 0% /proc

mnttab 0K 0K 0K 0% /etc/mnttab

fd 0K 0K 0K 0% /dev/fd

/dev/md/dsk/d3 2.0G 1.6G 334M 83% /var

swap 11G 160K 11G 1% /var/run

dmpfs 11G 0K 11G 0% /dev/vx/dmp

dmpfs 11G 0K 11G 0% /dev/vx/rdmp

/dev/md/dsk/d6 992M 8.2M 925M 1% /home

/dev/md/dsk/d5 2.0G 362M 1.5G 19% /tmp

/dev/vx/dsk/datadg/app1_vol

5.0G 2.6G 2.3G 54% /app1

/dev/vx/dsk/datadg/app_db_vol

1.0G 452M 536M 46% /app_db

/dev/vx/dsk/datadg/appdata_vol

40G 10G 28G 28% /app_data

[.....]

# umount /app1; umount /app_db; umount /app_data

Verify if with df -kh to see everything is fine.

# vi /etc/vfstab >>>> Comment out veritas filesystems in vfstab file.

Now disable application startup in /etc/rc2.d and /etc/rc3.d

# mv S90app_tomcat K90app_tomcat

Make sure you have comment out entries in vfstab file for all veritas filesystems, application auto startup is stoped through rc directories, and all veritas filesystems are un-mounted. Next task is export the diskgroup.

# vxvol -g datadg stopall >>>> The vxvol utility performs VERITAS Volume Manager (VxVM) operations on volumes. This is Maintenance command for VERITAS.

# vxdg deport datadg >>>> Disables access to the specified disk group. A disk group cannot be deported if any volumes in the disk group are currently open. When a disk group is deported, the host ID stored on all disks in the disk group are cleared (unless a new host ID is specified with -h), so the disk group is not reimported automatically when the system is rebooted.

# vxdg -t import datadg >>>> Dissassocaite dg from host. Where -t for a temporary name. import option imports a disk group to make the specified disk group available on the local machine.When a disk group is imported, all disks in the disk group are stamped with the host's host ID.

# vxdg deport datadg

Fine, now we are done with all applicable operations on disk group.

Start the installer/upgrader:

Mount the CD/ISO file on the server and start the installation.

Quick note on mounting an ISO file on Solaris:

# lofiadm -a /nilesh_rjoshi/VERITAS_Storage_Foundation_5.iso

/dev/lofi/1

# mount -F hsfs /dev/lofi/1 /mnt

Navigate to location

# cd /mnt/Solaris/Veritas/v5.0/sf_50_base

NOTE: BE SURE YOU'RE IN RUNLEVEL 3, THIS WILL NOT WORK IN RUNLEVEL 1

# ./installer

Storage Foundation and High Availability Solutions 5.0

Symantec Product                                 Version Installed   Licensed
===========================================================================
Veritas Cluster Server                           no                  no
Veritas File System                              4.0                 yes
Veritas Volume Manager                           4.0                 yes
Veritas Volume Replicator                        no                  no
Veritas Storage Foundation                       4.0                 yes
Veritas Storage Foundation for Oracle            no                  no
Veritas Storage Foundation for DB2               no                  no
Veritas Storage Foundation for Sybase            no                  no
Veritas Storage Foundation Cluster File System   no                  no
Veritas Storage Foundation for Oracle RAC        no                  no

Task Menu:

I) Install/Upgrade a Product C) Configure an Installed Product

L) License a Product P) Perform a Pre-Installation Check

U) Uninstall a Product D) View a Product Description

Q) Quit ?) Help

Enter a Task: [I,C,L,P,U,D,Q,?] I

Storage Foundation and High Availability Solutions 5.0

1) Veritas Cluster Server

2) Veritas File System

3) Veritas Volume Manager

4) Veritas Volume Replicator

5) Veritas Storage Foundation

6) Veritas Storage Foundation for Oracle

7) Veritas Storage Foundation for DB2

8) Veritas Storage Foundation for Sybase

9) Veritas Storage Foundation Cluster File System

10) Veritas Storage Foundation for Oracle RAC

11) Veritas Cluster Management Console

12) Web Server for Storage Foundation Host Management

13) Symantec Product Authentication Service

b) Back to previous menu

Select a product to install: [1-13,b,q] 5

Enter the system names separated by spaces on which to install SF: XXXXXX

Checking system licensing

Installing licensing packages

Permanent SF license registered on sdxapp1

Do you want to enter another license key for sdxapp1? [y,n,q] (n) >>>> press enter

Storage Foundation and High Availability Solutions 5.0

installer will upgrade or install the following SF packages:

VRTSperl Veritas Perl 5.8.8 Redistribution

VRTSvlic Veritas Licensing

VRTSicsco Symantec Common Infrastructure

VRTSpbx Symantec Private Branch Exchange

VRTSsmf Symantec Service Management Framework

VRTSat Symantec Product Authentication Service

VRTSobc33 Veritas Enterprise Administrator Core Service

VRTSob Veritas Enterprise Administrator Service

VRTSobgui Veritas Enterprise Administrator

VRTSccg Veritas Enterprise Administrator Central Control Grid

VRTSmh Veritas Storage Foundation Managed Host by Symantec

VRTSaa Veritas Enterprise Administrator Action Agent

VRTSspt Veritas Software Support Tools

SYMClma Symantec License Inventory Agent

VRTSvxvm Veritas Volume Manager Binaries

VRTSdsa Veritas Datacenter Storage Agent

VRTSfspro Veritas File System Management Services Provider

VRTSvmman Veritas Volume Manager Manual Pages

VRTSvmdoc Veritas Volume Manager Documentation

Press [Return] to continue:

[........]

I got the error below:

The following errors prevent SF from being installed successfully on XXXXX:

827877 KB is required in the / volume and only 490085 KB is available on XXXXX

installer log files are saved at /opt/VRTS/install/logs/installer-kPOqLK

Check which directories or files are eating up disk space (run these from / so that -xdev keeps the search on the root filesystem):

# find . -name core -xdev -exec ls -l {} \; 2>/dev/null >>>> Find core files

# find . -size +20000 -xdev -exec ls -l {} \; 2>/dev/null >>>> Find files larger than 20000 512-byte blocks (roughly 10 MB)

Do the appropriate clean-up and start installer once again.

So make sure your root filesystem has enough space available.
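To see how much needs to be cleaned up, the two figures from the installer error can simply be subtracted. The sketch below is a minimal illustration; kb_shortfall is a made-up helper name, and expr is used instead of shell arithmetic so that it also works in the old Bourne shell.

```shell
#!/bin/sh
# Print how many more KB must be freed, given the required and the
# available space in KB. Prints 0 when there is already enough room.
kb_shortfall() {
    required_kb=$1
    available_kb=$2
    if [ "$available_kb" -ge "$required_kb" ]; then
        echo 0
    else
        expr "$required_kb" - "$available_kb"
    fi
}

# Figures taken from the installer error above.
kb_shortfall 827877 490085    # prints 337792
```

So roughly 330 MB had to be freed on / in this case. On a live system, the available figure is the "avail" column of df -k /.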

CPI WARNING V-9-1-1303 Installing Veritas Storage Foundation will leave only approximately 5107 KB of free space remaining

in the /var volume on XXXXX.

Do you want to continue? [y,n,q] (n) y

installer is now ready to upgrade SF.

All SF processes that are currently running must be stopped.

Are you sure you want to upgrade SF? [y,n,q] (y) y

Checking for any AP driver issues on XXXXXX ..................................................................... None

Storage Foundation and High Availability Solutions 5.0


Stopping SF: 100%

Shutdown completed successfully on all systems

Storage Foundation and High Availability Solutions 5.0

Uninstalling SF: 100%

Uninstall completed successfully on all systems

Storage Foundation and High Availability Solutions 5.0

Installing SF: 100%

Installation completed successfully on all systems

Upgrade log files and summary file are saved at:

/opt/VRTS/install/logs/installer-XffXHU

CPI WARNING V-9-11-2246 You have completed upgrading VxVM on some or all of the systems. Reboot your systems at this time.

Reboot all systems on which VxFS was installed or upgraded.

shutdown -y -i6 -g0

See the Veritas File System Administrators Guide for information on using VxFS.

At least one package will require a reboot prior to configuration. Please reboot and run installer -configure on the

following systems after reboot has completed:

XXXXXX

Execute '/usr/sbin/shutdown -y -i6 -g0' to properly restart your systems.

Now let us install the maintenance pack (MP1).

# cd /mnt/Solaris/Veritas/v5.0/sf_50_mp1

# ./installmp

Veritas Maintenance Pack 5.0MP1 Installation Program

Copyright (c) 2007 Symantec Corporation. All rights reserved. Symantec, the Symantec Logo are trademarks or registered

trademarks of Symantec Corporation or its affiliates in the U.S. and other countries. Other names may be trademarks of their

respective owners.

The Licensed Software and Documentation are deemed to be "commercial computer software" and "commercial computer software

documentation" as defined in FAR Sections 12.212 and DFARS Section 227.7202.

Logs for installmp are being created in /var/tmp/installmp-tjSj6m.

Enter the system names separated by spaces on which to install MP1: XXXXX

Initial system check:

Veritas Maintenance Pack 5.0MP1 Installation Program

Checking installed packages on XXXXXX

The following patches will be installed on XXXXXX:

123996-06 to patch package VRTSsmf

123678-04 to patch package VRTSat

122632-02 to patch package VRTSobc33

122631-02 to patch package VRTSob

122633-02 to patch package VRTSobgui

123076-02 to patch package VRTSccg

123079-02 to patch package VRTSmh

123075-02 to patch package VRTSaa

[.......]

Press [Return] to continue:

installmp is now ready to install MP1.

Required 5.0 processes that are currently running will be stopped.

Are you sure you want to install MP1? [y,n,q] (y)

installmp will now stop the running processes and install MP1. Basically this installs several patches for the VERITAS packages. It is just a bug-fix process!

Add the mp1_rp3 [VRTSvxvm 5.0MP3RP2: Rolling Patch 02 for Volume Manager 5.0MP3]

# cd /mnt/Solaris/Veritas/v5.0/sf_50_mp1_rp3/

# patchadd 123742-04

# patchadd 124361-04

Once all this is done, re-import the disk group:

# vxdg import datadg

# vxdg deport datadg

# vxdg import datadg

# vxvol -g datadg startall

Verify with

# vxprint -ht >>>> Verify that the volumes are ENABLED/ACTIVE
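With many volumes it is easy to miss one that did not come up. The sketch below filters vxprint -ht output for volumes that are not ENABLED/ACTIVE. It assumes the usual layout of a volume record (v NAME RVG KSTATE STATE LENGTH ...), so check the header line of your own vxprint output first; volumes_not_active is just an illustrative name.

```shell
#!/bin/sh
# Read `vxprint -ht` output on stdin and print the name of every volume
# whose kernel state / state is not ENABLED / ACTIVE.
# Assumption: volume records start with "v" and carry KSTATE in field 4
# and STATE in field 5.
volumes_not_active() {
    awk '$1 == "v" && ($4 != "ENABLED" || $5 != "ACTIVE") { print $2 }'
}

# Usage on a live system (not run here):
#   vxprint -g datadg -ht | volumes_not_active
```

No output means every volume in the disk group is ENABLED/ACTIVE.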

Final touch-up:

- Re-enable the filesystems in /etc/vfstab

- Re-enable application startup in /etc/rc2.d and /etc/rc3.d

- Verify you can mount all filesystems in vfstab with "mount -a"

- Reboot the system one final time: init 6 or shutdown -y -i6 -g0

And you are done. This upgrade is pretty straightforward as long as everything is in order: enough disk space on root, /var and /tmp, a well-patched system, and so on.

Hope this is useful information for someone! Have a great weekend.

Permanently disabling sendmail on AIX - a small tip

To stop sendmail from starting on reboot, comment out its start line in the /etc/rc.tcpip file. Then run stopsrc -s sendmail to stop the running copy.
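The commenting-out step can be scripted roughly as below. This is only a sketch: it assumes the sendmail entry in rc.tcpip begins with "start /usr/lib/sendmail", so check your own file first, and comment_out_sendmail is a made-up helper name. It writes the result to standard output rather than editing the file in place.

```shell
#!/bin/sh
# Print the given rc.tcpip-style file with the sendmail start line
# commented out. All other lines are passed through unchanged.
# Assumption: the entry begins with "start /usr/lib/sendmail".
comment_out_sendmail() {
    sed '/^start[[:space:]]*\/usr\/lib\/sendmail/s/^/#/' "$1"
}

# Usage on the server (not run here), keeping a backup of the original:
#   cp /etc/rc.tcpip /etc/rc.tcpip.bak
#   comment_out_sendmail /etc/rc.tcpip.bak > /etc/rc.tcpip
#   stopsrc -s sendmail
```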

Friday, September 25, 2009

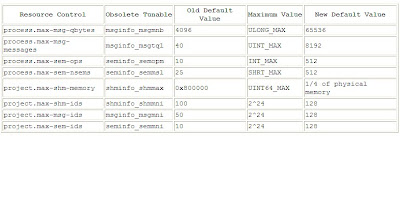

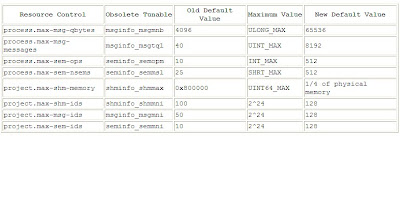

Oracle Kernel Parameters in Solaris 10

On Solaris 9, you edit the shared memory and semaphore kernel parameters in /etc/system, using the calculations and rules of thumb from the Oracle install manual.

The following table identifies the now obsolete IPC tunables and their replacement resource controls.